SparkContext uses Py4J to launch a JVM and creates a JavaSparkContext. By default, the PySpark shell makes a SparkContext available as ‘sc’, so creating a new SparkContext there won't work.

Following are the parameters of a SparkContext.

* master − The URL of the cluster it connects to.
* sparkHome − Spark installation directory.
* py files to send to the cluster and add to the PYTHONPATH.
* environment − Worker nodes environment variables.
* batchSize − The number of Python objects represented as a single Java object. Set 1 to disable batching, 0 to automatically choose the batch size based on object sizes, or -1 to use an unlimited batch size.
* conf − An object of L to set all the Spark properties.
* gateway − Use an existing gateway and JVM, otherwise initialize a new JVM.
* profiler_cls − A class of custom Profiler used to do profiling (the default is ).

Among these parameters, master and appName are the most commonly used. The first two lines of a PySpark program typically import SparkContext and create an instance of it.

Now that you know enough about SparkContext, let us run a simple example in the PySpark shell. In this example, we count the number of lines containing the character 'a' or 'b' in the README.md file.
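The line-counting example described above could be sketched roughly as follows. This is a minimal sketch, not the original tutorial's code: the function names, the `"local"` master, the `"First App"` application name, and the choice to report a separate count per character are all assumptions made for illustration. `SparkContext.getOrCreate` is used so that the shell's existing `sc` is reused rather than a second context being constructed.

```python
def count_lines_containing(lines, char):
    """Count how many lines contain `char` (plain-Python reference version)."""
    return sum(1 for line in lines if char in line)


def count_lines_with_spark(path, chars=("a", "b"), master="local", app_name="First App"):
    """Count, for each character in `chars`, the lines of the file at `path` containing it.

    `master` and `app_name` are illustrative defaults, not values from the article.
    """
    # Imported lazily so the helper above still works without PySpark installed.
    from pyspark import SparkConf, SparkContext

    # getOrCreate() returns the already-running context (e.g. the shell's `sc`)
    # if there is one, and only builds a new context otherwise.
    conf = SparkConf().setMaster(master).setAppName(app_name)
    sc = SparkContext.getOrCreate(conf)

    # Cache the RDD because it is scanned once per character.
    log_data = sc.textFile(path).cache()
    return {c: log_data.filter(lambda line, c=c: c in line).count() for c in chars}
```

Inside the PySpark shell, calling `count_lines_with_spark("README.md")` should return a dictionary with one count per character, assuming a README.md file exists in the working directory.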

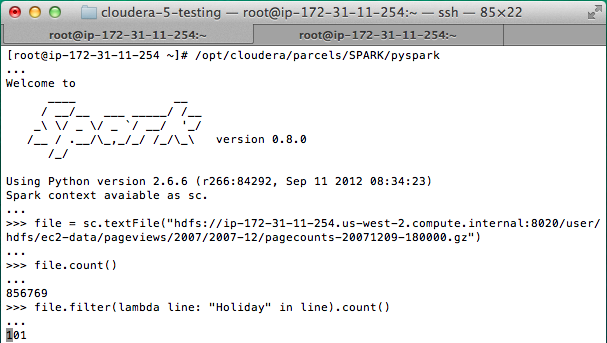

Spark url extractor python driver

SparkContext is the entry point to any Spark functionality. When we run any Spark application, a driver program starts, which has the main function, and your SparkContext gets initiated here. The driver program then runs the operations inside the executors on worker nodes.
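To make the driver and executor split more concrete, here is a small hedged sketch. The names `square` and `run_on_executors`, the `"local[2]"` master, and the application name are hypothetical choices for illustration only. The driver-side function obtains the SparkContext, and the `map` step is the part that Spark runs inside executors.

```python
def square(x):
    """Per-element operation; Spark ships this function to the executors."""
    return x * x


def run_on_executors(numbers, master="local[2]", app_name="Driver Sketch"):
    """Driver-side code: build an RDD, apply `square` on executors, collect results."""
    # Imported lazily so `square` can be used without PySpark installed.
    from pyspark import SparkConf, SparkContext

    conf = SparkConf().setMaster(master).setAppName(app_name)
    sc = SparkContext.getOrCreate(conf)   # the entry point, obtained in the driver
    rdd = sc.parallelize(numbers)         # distribute the data across partitions
    return rdd.map(square).collect()      # map runs on executors; collect returns results to the driver
```

With `"local[2]"` everything runs in one process on two threads, so this only imitates a cluster; on a real deployment the master URL would point at the cluster manager instead.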
